Introduction

LiDAR mapping is a well-known technique for quickly generating precise, geo-referenced spatial information about the shape and surface of the Earth. Light Detection and Ranging (LiDAR), a technology dating back to the early 1960s, creates high-resolution models. Because laser pulses can pass through gaps in vegetation, it can also map surfaces partly hidden by obstacles such as trees. LiDAR readings allow the distance between objects in space to be determined with high accuracy and precision, enabling the construction of a 3D digital representation of a region. LiDAR uses light in the form of a pulsed laser. However, using light to measure distance has its own shortcomings, such as difficulty detecting dark-colored objects and low-reflectivity paints, as well as highly reflective, lighter-colored surfaces. This paper presents the existing challenges in LiDAR and the solutions researchers have proposed to address them. It also covers LiDAR's applications and the key players, startups, and collaborations in the space.
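Pulsed LiDAR ranging rests on time of flight: the sensor times the round trip of a laser pulse and halves the path length. The sketch below illustrates that relation, assuming an ideal round-trip time and the speed of light in a vacuum (the refractive index of air is ignored); the function name `tof_distance_m` is illustrative, not taken from any product.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    The pulse travels to the target and back, so the one-way distance is
    half the total path length: d = c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A return arriving 1 microsecond after emission is roughly 150 m away.
    print(f"{tof_distance_m(1e-6):.1f} m")
```

Running the script prints `149.9 m`, which also shows why LiDAR timing electronics must resolve nanoseconds: each nanosecond of round-trip time corresponds to about 15 cm of range.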

High power requirement[1]

Most automotive companies pride themselves on the speed, resolution, and efficiency of their autonomous vehicles, and the sensors in those vehicles must work with high accuracy. LiDAR, however, consumes more power than other sensors and can drain batteries quickly. This reduces the efficiency of the car's battery, which in turn shortens the vehicle's range. Lower power efficiency means customers must recharge, or even replace parts, more often than with radar or other more power-efficient sensors. In addition, LiDAR performance depends on the weather: LiDAR struggles to capture its surroundings accurately, especially in fog, snow, or dust. LiDAR sensors and radar systems that address these problems are still in development. Recent patent filings that disclose solutions to these problems are presented below.

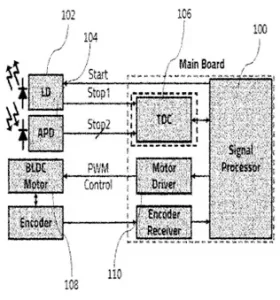

Carnavicom, in WO2022080586A1[2], addresses the problem of high power consumption. The system has a transmitter (102) that outputs light and a receiver (104) that receives the light reflected from a target. A signal processing unit (100) measures the distance to the target from the received light, and a time measurement unit (106) detects the time difference between the moment light is emitted and the moment its reflection is received. The signal processing unit changes the resolution of the transmitter according to the traveling speed of the moving object or the surrounding environment, and the transmitter outputs light at the resolution the signal processing unit determines: increasing the number of laser outputs from the transmitter increases both the resolution and the viewing-angle range. For each pulse period, the signal processing unit detects the time difference between a first stop signal, which carries the transmission time of the light, and a second stop signal, which carries the reception time of the reflected light. By controlling the laser's output resolution according to traveling speed or surroundings, the system reduces power consumption.
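The speed- and environment-dependent resolution control described in this patent can be illustrated with a small selector. This is a hypothetical sketch, not Carnavicom's method: the `Environment` categories, the three presets, the speed thresholds, and the assumption that emitter power scales with the number of laser outputs are all illustrative choices made here.

```python
from dataclasses import dataclass
from enum import Enum


class Environment(Enum):
    """Hypothetical surroundings categories (not defined in the patent)."""
    HIGHWAY = "highway"
    URBAN = "urban"
    PARKING = "parking"


@dataclass(frozen=True)
class ScanSetting:
    laser_outputs: int      # laser firings per scan line (hypothetical figure)
    view_angle_deg: float   # horizontal viewing-angle range (hypothetical figure)


# Hypothetical presets: more laser outputs give higher resolution and a wider view.
LOW = ScanSetting(laser_outputs=400, view_angle_deg=60.0)
MEDIUM = ScanSetting(laser_outputs=800, view_angle_deg=90.0)
HIGH = ScanSetting(laser_outputs=1600, view_angle_deg=120.0)


def select_setting(speed_kmh: float, env: Environment) -> ScanSetting:
    """Choose a scan setting from traveling speed and surroundings.

    Assumption: dense urban scenes and high speeds get the full setting;
    the speed thresholds are illustrative only.
    """
    if env is Environment.URBAN or speed_kmh >= 80.0:
        return HIGH
    if speed_kmh >= 30.0:
        return MEDIUM
    return LOW


def relative_power(setting: ScanSetting, reference: ScanSetting = HIGH) -> float:
    """Approximate emitter power as proportional to the number of laser outputs."""
    return setting.laser_outputs / reference.laser_outputs


if __name__ == "__main__":
    slow = select_setting(15.0, Environment.PARKING)
    print(slow, f"relative power: {relative_power(slow):.2f}")
```

Under these assumptions, a slow vehicle in a parking area fires a quarter of the laser outputs of the full setting, which is where the power saving comes from.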

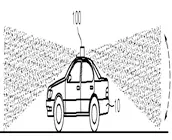

Magna Electronics, in US20210253048A1[3], takes a different approach to the related power and thermal management problem. A vehicular LiDAR sensing system (12) is mounted on the vehicle (10). The vehicle's speed is determined continuously as it travels along the road, and an initial level of electrical power is supplied to the LiDAR sensor based on that speed. The system then detects a change in driving conditions, such as the speed of oncoming traffic relative to the vehicle, and adjusts the electrical power according to the sensing range of the sensor in response to that change.
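The speed-based power scheme described for Magna's system can be sketched as follows. The sketch is hypothetical and is not the patented method: the power limits, reference range, look-ahead time, and the assumption that power must grow with the square of the required range (received power from an extended diffuse target falls roughly as 1/R²) are illustrative choices.

```python
# All values below are hypothetical; none come from the patent.
MIN_POWER_W = 2.0          # floor so nearby objects are always visible
MAX_POWER_W = 15.0         # hardware / thermal ceiling
REFERENCE_RANGE_M = 50.0   # range assumed to be reachable at MIN_POWER_W
TIME_HORIZON_S = 4.0       # how many seconds ahead the sensor should see


def required_range_m(closing_speed_mps: float) -> float:
    """Range needed to see an object TIME_HORIZON_S seconds before reaching it."""
    return max(closing_speed_mps, 0.0) * TIME_HORIZON_S


def power_for_range_w(range_m: float) -> float:
    """Scale power with range squared, clamped to [MIN_POWER_W, MAX_POWER_W].

    Assumption: for an extended diffuse target, received power falls roughly
    as 1/R^2, so keeping the return strength constant needs power ~ R^2.
    """
    scaled = MIN_POWER_W * (range_m / REFERENCE_RANGE_M) ** 2
    return min(max(scaled, MIN_POWER_W), MAX_POWER_W)


def initial_power_w(ego_speed_mps: float) -> float:
    """Initial power level chosen from the vehicle's own speed."""
    return power_for_range_w(required_range_m(ego_speed_mps))


def adjusted_power_w(ego_speed_mps: float, oncoming_rel_speed_mps: float) -> float:
    """Re-evaluate power when oncoming traffic changes the closing speed.

    The sensor must cover whichever is faster: stationary objects ahead
    (closing at ego speed) or oncoming traffic (closing at its relative speed).
    """
    closing = max(ego_speed_mps, oncoming_rel_speed_mps)
    return power_for_range_w(required_range_m(closing))


if __name__ == "__main__":
    print(f"initial:  {initial_power_w(20.0):.2f} W")         # 80 m needed
    print(f"adjusted: {adjusted_power_w(20.0, 40.0):.2f} W")  # 160 m needed, capped
```

With these assumed numbers, a vehicle at 20 m/s starts at about 5.12 W, and the arrival of oncoming traffic closing at 40 m/s pushes the request past the 15 W ceiling, so the power is capped there.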

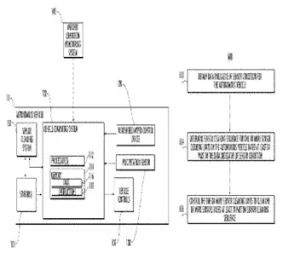

Uatc LLC addresses the problem of bad weather in its US patent 10173646B1[4]. Autonomous vehicle sensors can be impaired by precipitation, debris, contaminants, or any other environmental matter that interferes with their ability to collect data. Rain, snow, frost, and other weather-related conditions can degrade the quality of sensor data; for example, raindrops, snow, or condensation can collect on the lens or other components of a sensor (e.g., a camera or LiDAR sensor). Dirt, dust, road salt, organic matter (e.g., “bug splatter,” pollen, bird droppings), and other contaminants can likewise accumulate on or adhere to the sensor cover, housing, or other external components, further degrading the data. The invention determines a cleaning sequence for the vehicle's sensor cleaning units based on data indicative of sensor condition. The sequence comprises a set of control actions that cause the cleaning units to clean the sensors at a certain frequency.
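A cleaning sequence derived from sensor-condition data, as described in the Uatc patent, might look like the sketch below. Everything specific here is a hypothetical assumption rather than the patented method: the 0 to 1 obstruction score, the threshold, the "air" and "liquid" cleaning units, and the repeat intervals.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SensorCondition:
    sensor_id: str
    obstruction: float    # hypothetical 0..1 score, e.g. share of blocked returns
    precipitation: bool   # True while rain or snow is detected


@dataclass(frozen=True)
class CleaningAction:
    sensor_id: str
    method: str           # hypothetical cleaning units: "air" or "liquid"
    interval_s: float     # how often the action repeats (the cleaning frequency)


CLEAN_THRESHOLD = 0.2     # hypothetical: clean once 20% of the view is obstructed


def cleaning_sequence(conditions: list[SensorCondition]) -> list[CleaningAction]:
    """Turn per-sensor condition data into an ordered list of control actions.

    Sensors below the threshold are skipped; the most obstructed sensor is
    cleaned first.
    """
    dirty = sorted(
        (c for c in conditions if c.obstruction >= CLEAN_THRESHOLD),
        key=lambda c: c.obstruction,
        reverse=True,
    )
    actions: list[CleaningAction] = []
    for c in dirty:
        # Assumption: water droplets can be blown off with air, while dried
        # dirt or salt needs washer fluid.
        method = "air" if c.precipitation else "liquid"
        # Assumption: repeat more often while precipitation keeps re-fouling the sensor.
        interval = 10.0 if c.precipitation else 60.0
        actions.append(CleaningAction(c.sensor_id, method, interval))
    return actions


if __name__ == "__main__":
    readings = [
        SensorCondition("lidar_roof", 0.5, precipitation=True),
        SensorCondition("camera_front", 0.1, precipitation=False),
        SensorCondition("lidar_rear", 0.3, precipitation=False),
    ]
    for action in cleaning_sequence(readings):
        print(action)
```

In this example the roof LiDAR is cleaned first with air every 10 s, the rear LiDAR is washed every 60 s, and the nearly clean front camera is left alone.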

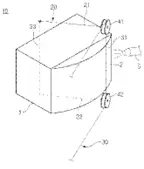

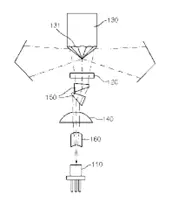

Wikioptics Co., Ltd. addresses the problem of size and cost in patent KR101687994B1[6]. The system has a laser light source (110) that emits a laser beam. A collimator lens (140) converts the emitted laser light into collimated light. A micro lens array (120) is installed in the path of the laser after the collimator lens. A poly-pyramid mirror (130) directs the laser omnidirectionally. A beam-shaping prism (150) installed at the front end of the micro lens array shapes the laser beam. The direction in which the laser is emitted outward is determined using a specific equation involving the number of mirror facets (131) and the field of view (FOV) of the lens array.

Velodyne is one of the first innovators in the LiDAR industry. Founded in 1983, Velodyne emerged as an early leader. The company has produced several models, each with a distinct focus and function. Its two main product lines are Solid State LiDAR and Surround LiDAR. The Solid State line includes the Velarray M1600 and Velabit models, designed for short- and long-range detection respectively; their compact size makes them easy to integrate into vehicles, machinery, and robots. The Surround LiDAR line focuses on 360-degree coverage. These models are designed to detect objects in inclement weather and are typically installed on vehicles for mapping and object detection. Velodyne participated in the DARPA Grand Challenge and has won many awards for its contributions to autonomous and unmanned systems. Its products have been adopted by industry leaders such as Google, Caterpillar, Ford, Baidu, Mercedes-Benz, and Nikon.

Founded in 2012, Luminar has built efficient products that have made it an industry leader. The company has partnered with automotive giants such as Mercedes-Benz and Volvo, which have incorporated Luminar's LiDAR sensors into self-driving passenger cars. Luminar has released three main models, Iris, Hydra, and Sentinel, each with different capabilities. Iris is small and lightweight and can be integrated into a variety of vehicles. Hydra focuses on highway object detection within 250 meters and operates at very high resolution and speed. Finally, Sentinel, developed in collaboration with Zenseact, emphasizes safety and is being incorporated into upcoming Volvo models.

Founded in 2013, California-based AEye focuses on building safe and efficient LiDAR systems. Its 4Sight Intelligent Sensing Platform has introduced several advances in LiDAR safety and efficiency. AEye is addressing autonomy not only in the automotive industry but also in the infrastructure and trucking industries. 4Sight is a solid-state system designed to detect objects in all climates, and it uses these detection capabilities to improve safety in autonomous vehicles.

The Opal LiDAR system is designed to perform unhindered in inclement weather and has a high point rate of 300,000 points per second. Its 360° field of view lets it capture objects in every direction. The device is designed to assist with takeoff and landing: by detecting obstacles on the runway, it helps determine whether the area is clear and where to land the aircraft. The company is currently working on an autonomous aircraft that can fly with minimal pilot intervention.
